Hello! Thank you for reading In Harms Way.

I was inspired to write this letter after hearing tech leaders claim that we have exhausted the extent of human knowledge in AI training. If that were true, what would it mean? How would it influence the evolution of digital technologies, and our experience of them? In this post I roughly sketch my reflections on the subject.

I recognize this is coming at a time when the media is saturated by talk of AI—I apologize. I promise that I started thinking about this long before I knew anything about DeepSeek! If more talk of AI seems like too much, please take the time you would have spent reading this and do something outside of the digital world. I suspect that it would be time that is far better spent.

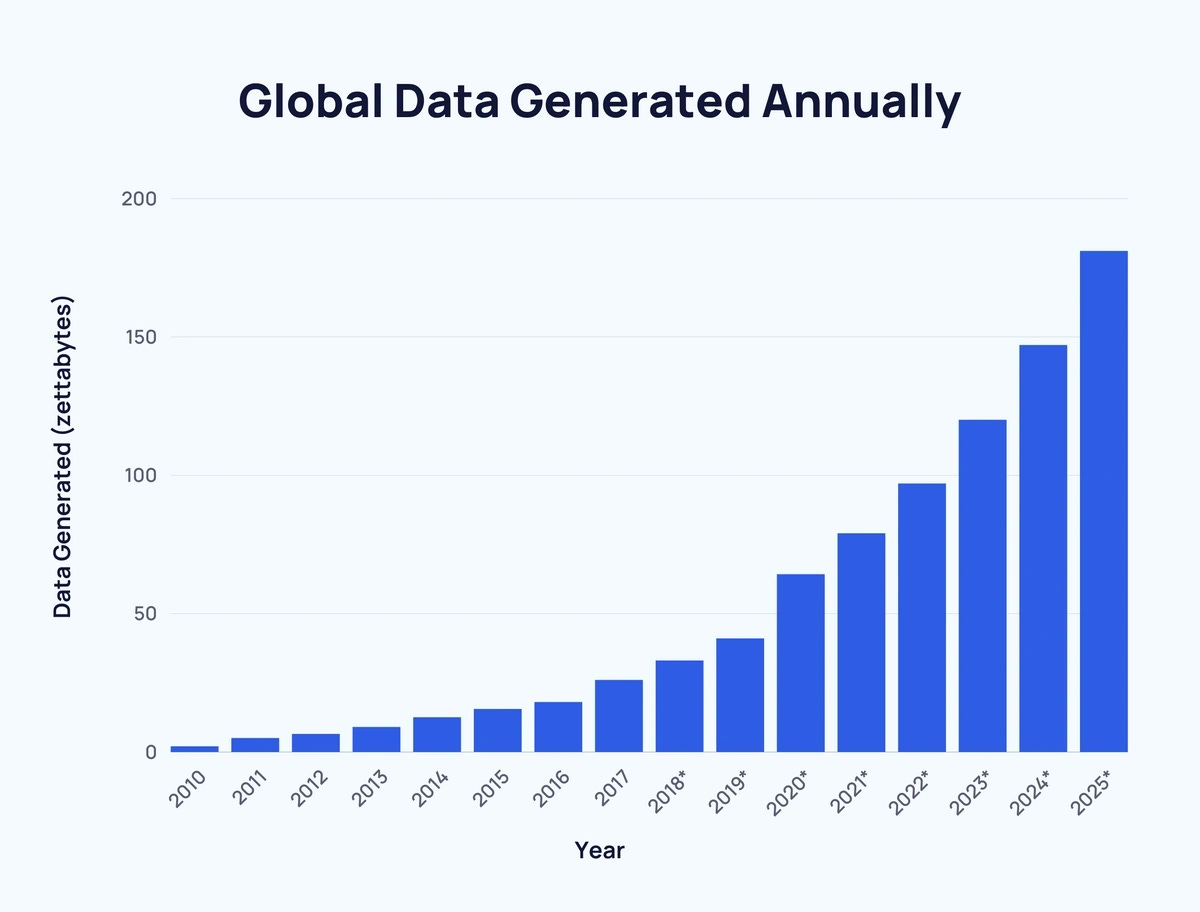

Some estimates suggest that humanity will generate 175 Zettabytes of data in 2025.[1]

Now, I know what you’re thinking… Yes, “Zettabytes” is a real word. The prefix Zetta represents 10 to the power of 21: one billion trillion. So, in other words, we will create 175 billion trillion (175,000,000,000,000,000,000,000) bytes of data in computers, data centers, and smart toasters across the world in 2025.

This probably doesn’t mean much to you. It didn’t to me either—I start losing track of scale after 3 or 4 zeros. Let me try to put it into perspective:

If we attempted to permanently store 175 Zettabytes of data, it would require over 2 square kilometers of state-of-the-art hard drives stacked 3 meters deep.[2] That’s roughly 400 football fields and $5.8 trillion worth of servers, costing more than the GDP of Germany. Oh, and that’s without any supporting infrastructure, like cooling, power, or personnel.
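As a sanity check on these figures, here is a minimal Python sketch that redoes the arithmetic from the server specs listed in the notes at the end of this post. The 5,351 m² football field (an American field including end zones) is my own added assumption; every other number comes from the notes.

```python
# Back-of-the-envelope check of the storage estimate, using the server specs
# from the notes. The football-field size is an added assumption.
PB = 10**15  # bytes in a petabyte
ZB = 10**21  # bytes in a zettabyte

data_bytes = 175 * ZB
server_capacity_bytes = 1.8 * PB       # one 4U storage server
server_cost_usd = 60_000               # approximate price per server
server_volume_m3 = 0.48 * 0.8 * 0.175  # approximate chassis dimensions in meters
stack_depth_m = 3.0                    # servers stacked 3 meters deep
football_field_m2 = 5_351              # assumption: American field incl. end zones

servers = data_bytes / server_capacity_bytes
cost_usd = servers * server_cost_usd
area_m2 = servers * server_volume_m3 / stack_depth_m
fields = area_m2 / football_field_m2

print(f"servers: {servers / 1e6:.1f} million")
print(f"cost:    ${cost_usd / 1e12:.2f} trillion")
print(f"area:    {area_m2 / 1e6:.2f} km^2 (about {fields:.0f} football fields)")
```

The result lands at roughly 97 million servers, about $5.8 trillion, and a little over 2 km², which is on the order of 400 football fields.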

In terms of digital content, 175 Zettabytes equates to 100 million years of 8K video, 7 million billion high-resolution images, or 2 billion billion emails.[3] This suggests that there will almost surely be more images of people stored, copied, or generated in 2025 than people who have physically set foot on Earth. For an analogy familiar to readers here on Substack, 175 ZB is enough data to store all books ever written (according to Google)[4] over 1 billion times.[5] And that is only the data generated in 2025.
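The same kind of check works for the media equivalents. This sketch uses the per-item sizes from the notes (200 GB per hour of 8K video, 25 MB per image, 75 kB per email, 1 MB per book). The count of roughly 130 million books is my assumption, based on Google’s widely cited 2010 estimate; the post itself does not give the number.

```python
KB, MB, GB, ZB = 10**3, 10**6, 10**9, 10**21
HOURS_PER_YEAR = 24 * 365.25

total_bytes = 175 * ZB

video_years = total_bytes / (200 * GB) / HOURS_PER_YEAR  # 8K video at ~200 GB per hour
images = total_bytes / (25 * MB)                         # high-res image at ~25 MB each
emails = total_bytes / (75 * KB)                         # average email at ~75 kB each
books_ever_written = 130_000_000                         # assumption: Google's ~130 million (2010)
library_copies = total_bytes / (books_ever_written * 1 * MB)  # 1 MB per 300-page book

print(f"8K video: {video_years:.2e} years")
print(f"images:   {images:.2e}")
print(f"emails:   {emails:.2e}")
print(f"copies of every book: {library_copies:.2e}")
```

The image count comes out at 7 × 10^15, that is, seven million billion, and the library fits over a billion times.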

Needless to say, we use a lot of data, and our usage is increasing exponentially. Some estimates put the average annual growth rate above 20%.[6]
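To see what “exponential” means at that rate, here is a minimal Python sketch. It assumes the growth holds at a steady 20% per year, which real growth will not do exactly, and computes how long the annual volume takes to double and how much it grows in a decade.

```python
import math

annual_growth = 0.20  # assumption: a steady 20% per year

# Exponential growth: volume after t years = V0 * (1 + r) ** t
doubling_time_years = math.log(2) / math.log(1 + annual_growth)
ten_year_factor = (1 + annual_growth) ** 10

print(f"doubles every {doubling_time_years:.1f} years")
print(f"grows {ten_year_factor:.1f}x per decade")
```

At 20% a year, the data we generate annually doubles roughly every four years and grows about sixfold in a decade.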

These are astronomical figures (and I mean astronomical literally: estimates put the number of stars in the observable universe within about an order of magnitude of 175 billion trillion).[7] That much data encompasses all of TikTok, YouTube, Netflix, Facebook, and Wikipedia. All of human knowledge and experience, both innocuous and nefarious, exists in the digital ether we have come to know as the internet.

Given such a grand scale of data, it may seem surprising to hear techsters like Elon Musk claim that “We’ve now exhausted basically the cumulative sum of human knowledge … in AI training.”

Now, I’m not here to debate whether or not Musk’s statement is true. Anything from the mouth of Musk is likely overhyped and deserves scrutiny. However, the point he is making reflects the perspective of many more credible figures in the AI industry.[8] AI technology has become so efficient that it is essentially possible to parse all of the important parts of human knowledge from the 175 ZB of data we generate annually. If data is the new oil, it seems like the reservoirs are drying up; if they haven’t yet, they will eventually. What happens if we use up our data? Can significant progress still be made in AI if the sources of fresh data are depleted? What are the societal implications? Let’s dig in.

A Note on AI Models

Before we can make progress answering these questions, we have to first develop an understanding of what, exactly, AI is. If you are already comfortable with the subject, feel free to jump ahead to the next section!

Generally speaking, AI is any use of computers for tasks that typically require human intelligence. For example, calculators are a form of AI. When wielding my TI-89 to conquer engineering assignments in undergrad, I was solving problems with AI. Another example is a web search (I mean the good ol’ kind from the mid-2010s). The algorithms used to search the web are also a certain brand of artificial intelligence. None of these examples seem scary or impressive anymore, which is an important fact to remember when considering the ChatGPTs of the modern era of AI.

As you can see, artificial intelligence is a much broader field than is commonly recognized. Today, when people talk about AI, they are generally referring to the subcategory of Machine Learning, or ML (actually, to be even more specific, they refer to a subset of ML called deep learning, which relies on neural networks). In ML, data is used to train a model to interpret new data outside of the training set. The model is a function that takes data as input and gives data as output, either in the same form or in some other form.

In the ML framework, the operative word is model. If you are not entrenched in the technical details of ML and AI like I am, this may seem like a strange choice. Why not go with bot, agent, operator, or some other more active noun instead? (I recognize that some of these words are used in the AI literature, but they are secondary definitions of the more fundamental model, as in Large Language Model such as ChatGPT.) In fact, the use of model to describe an AI derives from the mathematical context from which ML has evolved: statistical regression. Every ML model, from face recognition to GPT-4o and DeepSeek, is essentially a regression over data.

All models are wrong, but some are useful. — George E. P. Box

So, what exactly is regression? I think that it is easiest to explain this with a simple equation. Don’t run away! I promise, I will explain it in lots of detail. A general form of a regression (or AI model) is expressed as:

$$Y_i = f(X_i;\, P) + e_i$$

See? Easy! Just kidding… Let me explain.

In this equation, X represents input data and Y represents output data. The subscript i indicates a single sample from the data set. Typically, i will run from 1 to n, where n represents the total number of samples in the dataset. The model itself is defined by f here. It is a function which accepts input data X and depends on parameters P. The function may take many forms, but it must accept input data and be determined by fixed parameters. Finally, we must always recognize that the model is never perfect, so we include the error term e, which represents the parts of Y that the function f does not capture.
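To make the equation concrete, here is a minimal Python sketch in which f is assumed to be a straight line, so P is just an intercept and a slope. The four data points and the hand-picked parameters are made up for illustration.

```python
from typing import Sequence


def f(x: float, P: Sequence[float]) -> float:
    """A deliberately simple model: a straight line with P = (intercept, slope)."""
    return P[0] + P[1] * x


# A tiny made-up dataset of n = 4 samples (X_i, Y_i).
X = [1.0, 2.0, 3.0, 4.0]
Y = [2.1, 3.9, 6.2, 7.8]

P = (0.0, 2.0)  # fixed parameters, chosen by hand here

# The error term e_i is whatever part of Y_i the model fails to capture,
# so that Y_i = f(X_i; P) + e_i holds for every sample.
e = [y - f(x, P) for x, y in zip(X, Y)]

print([round(err, 2) for err in e])  # [0.1, -0.1, 0.2, -0.2]
```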

When we talk about AI and machine learning, we are really referring to the function f. When we say it understands something, we mean that its parameters P have been specially tuned to map certain inputs to certain outputs.

Here are some examples. In a face recognition system, the inputs X come in the form of images, and the outputs Y might be in the form of a probability (a value from 0 to 1) representing how likely it is that the image contains the user’s face. In the case of ChatGPT, the inputs come in the form of a text sequence, and the outputs are in the form of a text completion; that is, another text sequence that completes the first one, like the answer to a question.

This framework may sound simple, but modern problems in AI are very complex. To achieve, say, accurate text completion, similarly complicated models are needed. These models are far more complicated than humans can define on their own. For example, GPT-4, one of the models behind ChatGPT, is widely reported to have more than a trillion parameters P, though OpenAI has not disclosed the figure. No amount of manpower could come up with these by hand. It must be done computationally.
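One way to see why parameter counts explode is to count them in a plain fully connected neural network. The sketch below is illustrative only: the layer sizes are hypothetical, and large language models use transformer layers with additional components, so this is not how their totals are actually computed.

```python
from typing import List


def dense_layer_params(n_in: int, n_out: int) -> int:
    """A fully connected layer has one weight per input-output pair plus one bias per output."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes: List[int]) -> int:
    """Total parameters in a stack of fully connected layers, e.g. [784, 512, 10]."""
    return sum(dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


print(mlp_params([784, 512, 10]))          # a small, hypothetical network
print(dense_layer_params(1_000, 1_000))    # one square layer, 1,000 wide
print(dense_layer_params(10_000, 10_000))  # ten times wider, ~100x the parameters
```

Because each layer’s weight count scales with the product of its input and output widths, making a network ten times wider multiplies that layer’s parameters roughly a hundredfold.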

That is where Machine Learning comes in. Instead of trying to tune the models by hand, data is used to learn the parameters that define the model. Either by providing labeled examples of data to show what patterns look like or by allowing the computer to identify the patterns on its own, the parameters P in the function f are gradually calculated. If enough data is provided, and the form of the function f is sufficiently complex, then the model will be able to effectively identify the patterns that exist in the dataset.
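Here is a minimal sketch of that idea for a straight-line model, assuming gradient descent on mean squared error, which is one common training scheme among many. The data are generated from y = 1 + 2x purely for illustration, and the learning rate and step count are arbitrary choices that happen to converge for this data.

```python
# Toy data generated (for illustration only) from y = 1 + 2x, with no noise.
X = [0.0, 1.0, 2.0, 3.0, 4.0]
Y = [1.0 + 2.0 * x for x in X]


def f(x, P):
    """Straight-line model: P = [intercept, slope]."""
    return P[0] + P[1] * x


def train(X, Y, lr=0.05, steps=2000):
    """Learn P by gradient descent on the mean squared error."""
    P = [0.0, 0.0]  # start with uninformed parameters
    n = len(X)
    for _ in range(steps):
        errors = [f(x, P) - y for x, y in zip(X, Y)]
        # Partial derivatives of (1/n) * sum(error^2) with respect to P[0] and P[1].
        grad_intercept = 2.0 / n * sum(errors)
        grad_slope = 2.0 / n * sum(err * x for err, x in zip(errors, X))
        P[0] -= lr * grad_intercept
        P[1] -= lr * grad_slope
    return P


P = train(X, Y)
print([round(p, 4) for p in P])
```

Nobody tells the model that the answer is an intercept of 1 and a slope of 2; the parameters drift toward those values because that is where the error on the data is smallest.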

In other words, the trained model “understands” the knowledge in the data by “learning” the underlying patterns that they contain. When a new data point from outside of the training set is presented, the model tries to place it within the framework of learned patterns and interpolate what the potential output could be. As a result, AI is really good at representing data which is similar to the training data, but terrible at representing data that is not similar. More technically, AI can interpolate very well but cannot extrapolate effectively.
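A toy illustration, under deliberately simple assumptions: the “true” pattern is y = x², the model can only draw straight lines, and it only ever sees data between 0 and 1. Inside that range its predictions are close; far outside it, they are wildly off.

```python
def fit_line(X, Y):
    """Ordinary least squares for y = a + b*x, in closed form."""
    n = len(X)
    mean_x, mean_y = sum(X) / n, sum(Y) / n
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(X, Y)) / sum((x - mean_x) ** 2 for x in X)
    return mean_y - b * mean_x, b


X = [0.0, 0.25, 0.5, 0.75, 1.0]
Y = [x ** 2 for x in X]  # the "true" pattern is a parabola...
a, b = fit_line(X, Y)    # ...but the model can only represent a line


def error_at(x):
    """Absolute gap between the model's prediction and the true pattern at x."""
    return abs((a + b * x) - x ** 2)


print(f"inside the training range (x = 0.6): error {error_at(0.6):.3f}")
print(f"far outside it (x = 10):             error {error_at(10.0):.1f}")
```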

Diminishing Returns from our Mountains of Data

So modern AIs are just highly complex pattern recognition machines. They encode any patterns existing in the data within their architecture and parameters. In this context, what does it mean that the data wells may be drying up? It means that we will start to get diminishing returns from the mountains of data we generate.

If we have exhausted our data sources in training AI, we have essentially taught computers the entirety of human knowledge. This may sound like some wild sci-fi fantasy, but just consider the effectiveness of modern systems. Even in the innately human realm of poetry, people cannot distinguish between what is real and what is artificial.[9] That AI has mastered human knowledge may not be so far from the truth after all.

But how did we get here? Even just one decade ago, only thinkers on the fringe would have claimed that computer intelligence was commensurate with human intelligence. Now, the debate is carried on by experts from all corners of science, technology, and philosophy. What happened? Simply put, computational power increased… a lot.

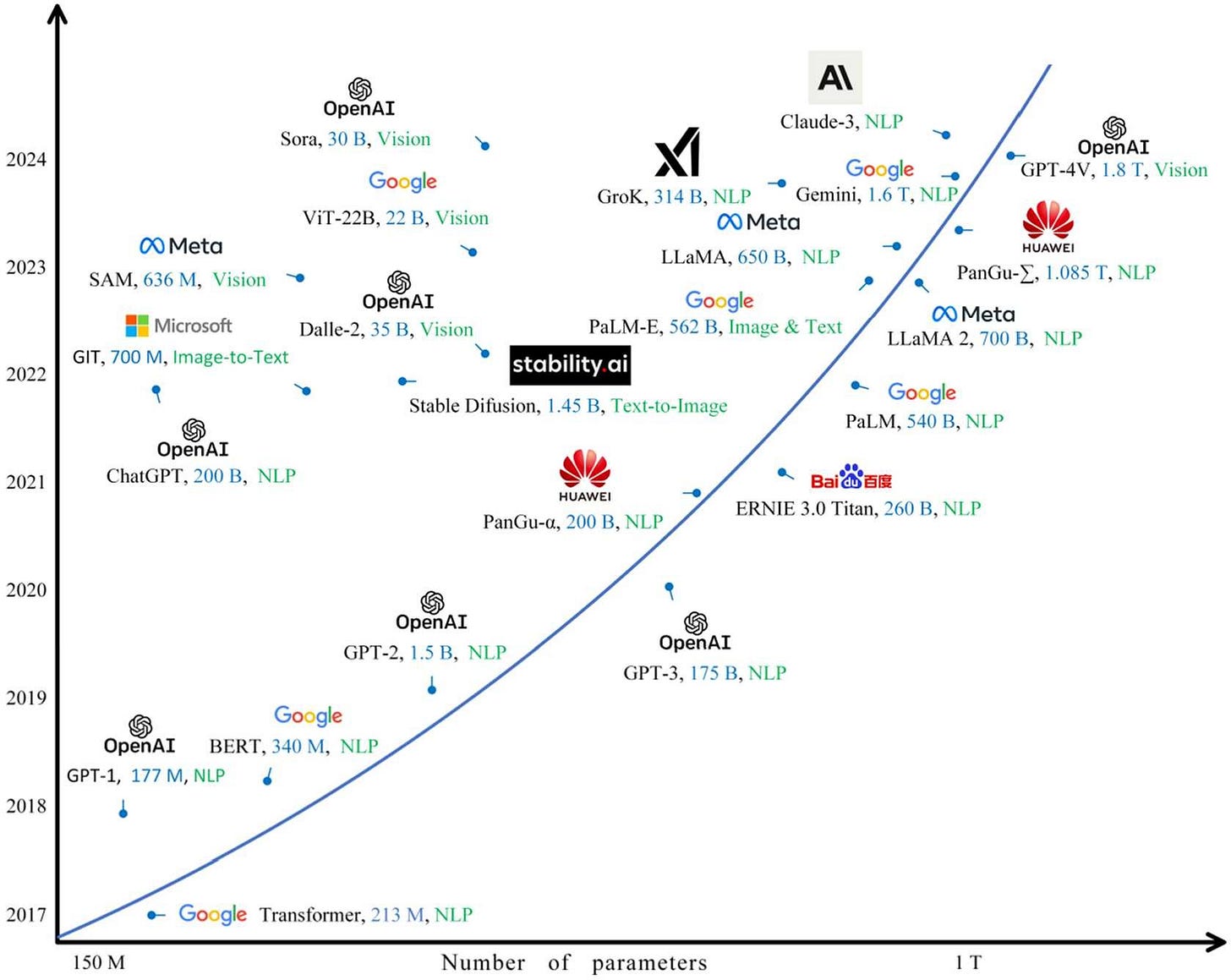

Until recently, there was always far more data than we could manage. Therefore, the most effective approach to improving AI performance has traditionally been to increase model computational complexity.[10] This means stuffing as many parameters as possible into those regression functions we discussed above and training them with as much data and computing power as possible. The figure above, from an academic review of large AI models, shows how much their size has increased over time.[11] With a growth rate that is still accelerating, the size of OpenAI’s models increased over 10,000 times from GPT-1 to GPT-4V in less than seven years.
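The per-year rate implied by those two numbers is a quick calculation. Since “over 10,000 times” is a lower bound and “less than seven years” an upper bound, this sketch gives a floor on the average annual growth, not an estimate of it.

```python
growth_factor = 10_000  # "over 10,000 times" (a lower bound)
years = 7               # "less than seven years" (an upper bound)

# Constant annual factor g such that g ** years == growth_factor.
annual_factor = growth_factor ** (1 / years)

print(f"at least {annual_factor:.1f}x larger every year, on average")
```

Even this conservative floor means model size grew more than threefold per year on average.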

It is with models like these, and the burgeoning computational power required to support their growth, that AI is now able to encode the entirety of human knowledge. While major technical breakthroughs such as the transformer have accelerated its development, our ability to devour vast reservoirs of data has been a primary driver of AI success.

This finally brings me to the point that I have been trying to make: the extent of human knowledge is not proportional to the amount of data that it generates.

Consider some well-known work of literature. Shakespeare’s The Tempest, let’s say. Though Prospero’s tale may now occupy hundreds of Terabytes of hard drive space (that is a lot, for text) across the globe, the story remains unchanged. No amount of replication could instead compel Miranda to exclaim, “O brave new world, that has such data in’t!”

This is not just true of literature, but of history, images, persons, and abstractions. Creating new data cannot modify the substance of its subject. Data is inherently independent of whatever it represents and therefore cannot influence it. Thus, using more data in training AI does not guarantee improved performance. Once models have encoded the repositories of human knowledge, they will no longer be able to meaningfully increase their capacity by simply boosting computational power. They will then have to learn at a pace commensurate with the rate at which humans generate knowledge, that is, much more slowly.
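A minimal sketch of the replication argument, assuming a least-squares straight-line fit: copying every sample a thousand times leaves the fitted parameters unchanged, up to rounding. Real training pipelines differ in many ways, so this only illustrates the principle, and the data values are made up.

```python
def fit_line(X, Y):
    """Ordinary least squares for y = a + b*x, in closed form."""
    n = len(X)
    mean_x, mean_y = sum(X) / n, sum(Y) / n
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(X, Y)) / sum((x - mean_x) ** 2 for x in X)
    return mean_y - b * mean_x, b


X = [0.0, 1.0, 2.0, 3.0]
Y = [0.9, 3.1, 4.8, 7.2]  # made-up observations

original = fit_line(X, Y)
replicated = fit_line(X * 1000, Y * 1000)  # every (x, y) pair copied 1,000 times

print(original)
print(replicated)
```

A thousand times more data, and not one bit more knowledge: both fits give the same intercept and slope.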

Progress in AI is Going to Look Different

I am no clairvoyant, but I suspect that efforts in AI will follow a different tack as fresh data sources become increasingly rare. Recognizing that other avenues exist, I suggest two research directions that I think will be effective, and one that I think is overhyped.

Creative Architectures and Training Schemes. For those who aren’t familiar, architecture in AI jargon means the form of the ML regression function which is trained by data. Most of the big AI models that we have become familiar with (ChatGPT, Claude, Gemini, etc.) are slight variations on an architectural element known as the Transformer. As data becomes less potent, researchers in the AI industry will advance the science by creating increasingly efficient architectures. While this may yield only marginal improvements in model understanding, it may lead to large decreases in training or usage cost.

This already seems to be playing out in reality. DeepSeek, which has caused such a stir lately, does not significantly improve model accuracy; its gains are in cost. Its developers achieved these improvements by implementing creative architectures and training schemes.

AI in the Natural Sciences. When talking about AI, most people probably think of Large Language Models (LLMs) like ChatGPT, which are designed for general use. Other forms of ML, however, are often applied to specific problems in science and technology. Scientific models are designed with different goals than an LLM. Typically, much less data is available, that data is often less interpretable than text or images, and hallucinations (where AI generates fake responses) cannot be tolerated. Overcoming these hurdles has been a major thrust of scientific AI research and will, I suspect, continue to be so.

I think that this domain may be the most effective use of AI for actually expanding the breadth of human knowledge. The key point is that AI empowers humans to see further into nature, rather than making discoveries of its own accord.

Synthetic Data. When the AI cognoscenti talk about drying data reservoirs, synthetic data generation often comes up. If we are running out of new data, why not just artificially make more? After all, we can generate realistic images and text quite effectively, these days. I think that this, however, will not be as fruitful as many suspect.

The main problem (and I think there are many) with using synthetic data for model training is that it cannot contain any new information not already encoded in the generator model. So, the best you can do by training a new model on synthetic data is to replicate the understanding in the generator. But the generator itself must have been trained on some set of data, right? Somewhere down the chain, real data is required, and real improvements will require more real data. Any subsequent models developed from synthetic data are only going to be representations of the original training sets.
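A toy sketch of this argument, assuming a least-squares straight-line fit and a made-up “true” pattern y = x². A generator trained on real data can produce as much synthetic data as we like, yet a new model trained on that data recovers exactly the generator’s parameters, errors included.

```python
def fit_line(X, Y):
    """Ordinary least squares for y = a + b*x, in closed form."""
    n = len(X)
    mean_x, mean_y = sum(X) / n, sum(Y) / n
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(X, Y)) / sum((x - mean_x) ** 2 for x in X)
    return mean_y - b * mean_x, b


def true_pattern(x):
    """Hypothetical 'reality' behind the real data."""
    return x ** 2


# Step 1: a generator model is trained on a small set of real observations.
X_real = [0.0, 0.25, 0.5, 0.75, 1.0]
gen_a, gen_b = fit_line(X_real, [true_pattern(x) for x in X_real])

# Step 2: the generator produces plenty of synthetic data.
X_syn = [i / 100 for i in range(101)]
Y_syn = [gen_a + gen_b * x for x in X_syn]

# Step 3: a new model is trained only on the synthetic data.
stu_a, stu_b = fit_line(X_syn, Y_syn)

print(f"generator: a={gen_a:.4f}, b={gen_b:.4f}")
print(f"student:   a={stu_a:.4f}, b={stu_b:.4f}")
print(f"student error vs. reality at x=0.5: {abs(stu_a + stu_b * 0.5 - true_pattern(0.5)):.3f}")
```

Twenty times as many synthetic points as real ones, and the student learns nothing the generator did not already know, including its mistakes.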

Therefore, it is foolhardy to think that synthetic data is the antidote for a lack of real data. If we really have captured the extent of human knowledge in AI models, there is no advantage that it can bring. Instead, training models on synthetic data will only reinforce the biases and inaccuracies encoded in the model parameters, making them less realistic and less reliable. All of this at a high cost, too, since synthetic data is expensive to create.

In Summary

Whether or not we have “exhausted the cumulative sum of human knowledge in AI training” is debatable. However, it is not unfathomable to think that this could one day occur. When that day arrives, we would be prudent to recognize that our data is subject to diminishing returns. It grows disproportionately faster than human knowledge and its gains will eventually saturate. Then, we must make decisions about how to pursue the advancement of technology. If we are not careful to allocate our digital resources responsibly, we may find ourselves ruined.

A Real Photograph of a Real Thing

Generative AI threatens to desensitize us to the beauty of natural reality. In revolt, I present a real photograph, created, not by the marching bits in a mechanical brain, but by the reflection of light on and through physical media.

Lately, I have come to enjoy abstract photography. Perhaps it is because I am so deeply saturated in natural images that I occasionally need new perspectives to stay engaged. Whatever the reason, I find the generality and emotion of abstract artwork compelling. Can you tell how this image was made?

Notes

[1] See, for example, “The World Will Store 200 Zettabytes Of Data By 2025” and the Wikipedia article “Zettabyte Era.”

[2] For these calculations I am using specs from Supermicro Cloud-Density Storage servers. In a 4U chassis, these store up to 1.8 petabytes of data. To meet global data demands, roughly 97 million of these would be needed, each at roughly $60,000 a pop. Each server measures about 0.48 × 0.8 × 0.175 meters.

[3] For this I am using the following estimates for data sizes: 8K video, 200 GB per hour; high-res image, 25 MB; average email, 75 kB. These figures are, of course, estimates. I found them on Google ;)

[5] For this, I estimated the size of a 300-page book to be 1 MB.

[7] See, for example, this article on Space.com, where the number of stars estimated in the Milky Way is multiplied by the number of observed galaxies to yield roughly 10 to the power of 24 (one septillion) stars.

[8] Musk’s comments echo those of OpenAI cofounder Ilya Sutskever, who argued at the academic NeurIPS conference that the AI industry has reached “peak data.” This study by the Data Provenance Initiative at MIT is also worth considering; it states that the data repositories on which AI models are trained are being severely diminished through data restrictions.

[9] I wrote an entire post on this phenomenon. Not only is AI poetry indistinguishable from human-written poetry, it is preferred. Read more about it here: Reclaiming Poetry.

[10] Sometimes this is referred to as Sutton’s Bitter Lesson, which implies that trying to come up with smart solutions to a given problem is less effective than simply waiting for computers to become capable enough to support more general models. It can be seen as a philosophical topic, but seems to be borne out in practical results.